Deflation & Defensibility

Capital Efficient #8

Welcome to the latest edition of Capital Efficient. Let’s get into it.

Vertical AI Defensibility

"Why won't OpenAI just do this?" is the question every vertical AI founder fields from skeptical investors. Karpathy’s 2025 LLM Year in Review offers a useful rebuttal. Using Cursor as his case study, he extrapolates to explain the broader app-layer opportunity. In short, "LLM apps" provide real value through:

Context Engineering: A best-in-class vertical AI platform pulls in all relevant context, structures it optimally for the task, includes pre-built expert-level prompts, and lets users skip the “setup” phase entirely.

Orchestration: Quality models are proliferating fast. How many startups were making calls to Gemini six months ago vs. just OAI/Anthropic? Knowing which model excels at which task is increasingly complex. Vertical AI apps need to orchestrate calls across multiple LLMs, optimized for each step of a given workflow.

UX: ChatGPT’s empty text box and Claude Code’s black terminal don’t give typical business users much to work with. The best vertical apps harness LLM power for discrete industries and make it simple for workers to incorporate AI into their day-to-day.

Autonomy: AI outputs aren't perfect. Depending on the task, a human-in-the-loop is necessary. Vertical apps should let users make the tradeoff between automation, quality, and efficiency, choosing when to let AI run autonomously vs. when to double-check its work. This flexibility is key for real-world adoption.
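To make the orchestration and autonomy points concrete, here is a minimal sketch of how an app might route each workflow step to a different model and decide when a human should double-check the output. Everything in it is an assumption for illustration: the step names, the model labels (placeholders, not real model identifiers), the `confidence` score, and the `autonomy_threshold` setting are all hypothetical, and a real product would be considerably more involved.

```python
from dataclasses import dataclass

# Hypothetical routing table: workflow step -> model label.
# The labels are placeholders, not real model identifiers.
ROUTES = {
    "extract": "fast-cheap-model",
    "draft": "frontier-model",
    "review": "reasoning-model",
}
DEFAULT_MODEL = "frontier-model"


@dataclass
class StepPlan:
    step: str
    model: str
    needs_human_review: bool


def pick_model(step: str) -> str:
    """Return the model assigned to a step, falling back to the default."""
    return ROUTES.get(step, DEFAULT_MODEL)


def plan_step(step: str, confidence: float, autonomy_threshold: float) -> StepPlan:
    """Route a step and flag it for review when confidence is below the threshold.

    `confidence` (0 to 1) is assumed to be supplied by the app itself;
    `autonomy_threshold` is the user-controlled dial between automation
    and double-checking.
    """
    return StepPlan(step, pick_model(step), confidence < autonomy_threshold)
```

A cautious user might set `autonomy_threshold` to 0.9 so most outputs get reviewed, while a user optimizing for speed might drop it to 0.5 and let more steps run on their own.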

My view: the labs will make immense money providing inference to this next wave of apps. Think of foundation models as raw commodities and vertical AI as the finished product. Vertical AI companies are already showing they can generate revenue faster than cloud-era software businesses. Every company needs to leverage AI, but horizontal models and their UX won’t cut it for most workflows.

What Karpathy calls “LLM Apps” (and what I call Vertical AI) is how AI actually gets deployed in the economy beyond prosumer and generalist use cases.

Model Cost Deflation + Anticipatory Building

The lesson of the last three years: build ~50% ahead of model capabilities, use the best available models to wow customers, and assume costs will fall. Cursor did exactly this and is now the fastest-growing software company in history. When they started building AI code gen, foundation models couldn’t reliably produce junior-level code. But they were positioned to capture the market as models improved.

This is counterintuitive for founders trained on cloud-era SaaS economics, where gross margin was sacred from day one. In AI, that logic inverts. The companies winning right now are the ones spending aggressively on inference to deliver a product that feels like magic, even if unit economics look ugly in the early innings. The bet is that cost curves will bail you out, and so far that bet has paid off every time. Founders who optimize for margin too early end up with a mediocre product that loses to a competitor willing to burn more on compute.

Customers always want the best model. Model pricing collapses over time. So max out quality over margin. Worst case, you lower model quality later to cut costs and drive up margin at scale. And by then the “discount” model will be better than today’s SOTA.
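A back-of-the-envelope sketch of that arithmetic follows. The numbers are purely illustrative assumptions, not figures from this piece or from any pricing data: $100 of revenue per customer, $80 of inference cost today, and inference cost halving each year while price and usage stay flat.

```python
def gross_margin(revenue: float, inference_cost: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - inference_cost) / revenue


def projected_margins(
    revenue: float,
    initial_cost: float,
    annual_cost_decline: float,
    years: int,
) -> list[float]:
    """Margin for year 0 through `years`, with cost shrinking by a fixed rate.

    `annual_cost_decline` is a hypothetical constant (0.5 = costs halve yearly).
    """
    margins = []
    cost = initial_cost
    for _ in range(years + 1):
        margins.append(gross_margin(revenue, cost))
        cost *= 1 - annual_cost_decline
    return margins


# Illustrative inputs only: $100 revenue, $80 inference cost, costs halve yearly.
margins = projected_margins(100.0, 80.0, 0.5, 3)
print([round(m, 2) for m in margins])  # → [0.2, 0.6, 0.8, 0.9]
```

Under those assumed inputs, a 20% margin business becomes a 90% margin business in three years without touching price, which is the bet described above. If costs fall more slowly, or usage per customer grows faster than costs fall, the curve looks very different.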

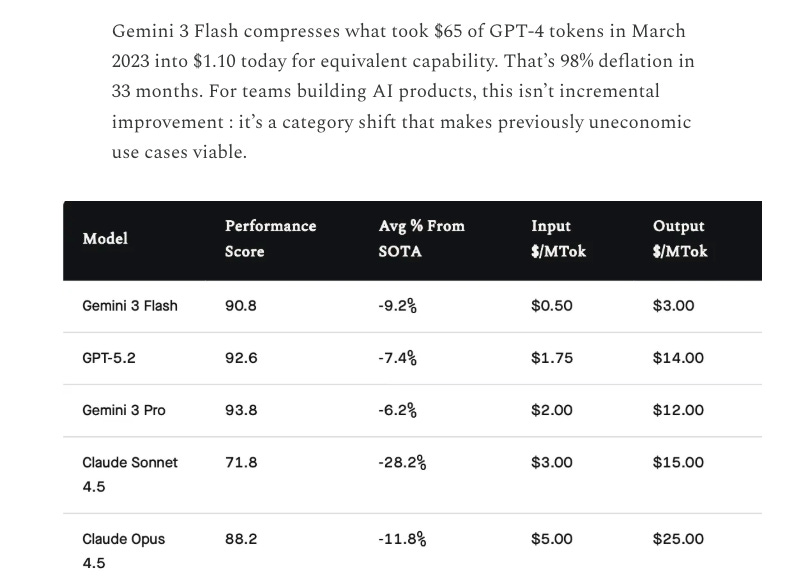

For a data-driven breakdown of AI input cost deflation, check out Tomasz Tunguz’s Gemini 2.5 Flash analysis. I included an excerpt from his great piece, which drives the point home.

Source: https://substack.com/home/post/p-181936170.

Deals

Late December isn’t known as the hottest time for deal announcements, but a few caught my eye.

Chai Discovery: Chai raised a $130MM Series B led by Oak HC/FT and General Catalyst, with a slew of other A-list investors participating. AI drug discovery isn’t a vertical I’ve spent much time on, but it has been gobbling up VC dollars. If it works, the medical breakthroughs could be world-changing. One question I keep coming back to: how far into development do these companies take their drugs before selling them off to the majors? My bet is that some go all the way, starting with partnerships before developing their own drugs to compete against the Eli Lillys and Pfizers of the world.

Lovable: Running away with the title of Sweden’s most valuable startup, Lovable raised a $330MM Series B at a reported $6.6B valuation. The round was led by CapitalG and Menlo Ventures. Lovable surpassed $200MM in ARR last year and is now aiming for the enterprise with the goal of becoming “the last piece of software” a company ever buys. I am not sure even the founders believe that, but it’s a hell of a narrative.

What I Am Reading

Raising A Series A in an AI First World: Solid founder service from Susan Liu at Uncork with spot-on advice for startup leaders thinking about raising an A in today’s market. The short of it: if you aren’t pre-empted, and aren’t growing very fast, be prepared to do a Seed-II. Well worth a read.

Paying $3 for $1: The Google Doc Guru himself, Chris Paik, uses game theory to break down the dynamics at the frothier end of today’s private markets. His view is that the music stops once the public markets won’t accept AI asset prices, but given the proliferation of late-stage capital and the tendency of tech co’s to stay private indefinitely, I can’t really say when that will be.

How The Phone Ban Saved High School: NY Mag on the positive impact of NYS banning phones in schools. It was the phones after all. If Yondr were public, I’d be piling into it - there’s no future where phones aren’t eventually banned in schools nationwide as this gets replicated.

And if you’ve made it this far, I truly appreciate the readership and hope you have a great and restful holiday break. Until next year, thanks for reading.

Nailed the deflation point. Building 50% ahead of capabilities and banking on cost curves is exactly the opposite of traditional SaaS margin discipline, but it's working. I've watched teams hesitate on inference spend only to get lapped by competitors who shipped magic-tier UX first and worried about unit economics later. The tradeoff between autonomy and human-in-the-loop is where real adoption happens, not in demos. Cursor proved this by letting developers stay in control while AI does the grunt work, rather than promising full automation nobody trusts yet.